This notebook contains an excerpt from the Python Programming and Numerical Methods - A Guide for Engineers and Scientists, the content is also available at Berkeley Python Numerical Methods.

The copyright of the book belongs to Elsevier. We also have this interactive book online for a better learning experience. The code is released under the MIT license. If you find this content useful, please consider supporting the work on Elsevier or Amazon!

< 20.1 Numerical Differentiation Problem Statement | Contents | 20.3 Approximating of Higher Order Derivatives >

Finite Difference Approximating Derivatives¶

The derivative \(f'(x)\) of a function \(f(x)\) at the point \(x=a\) is defined as:

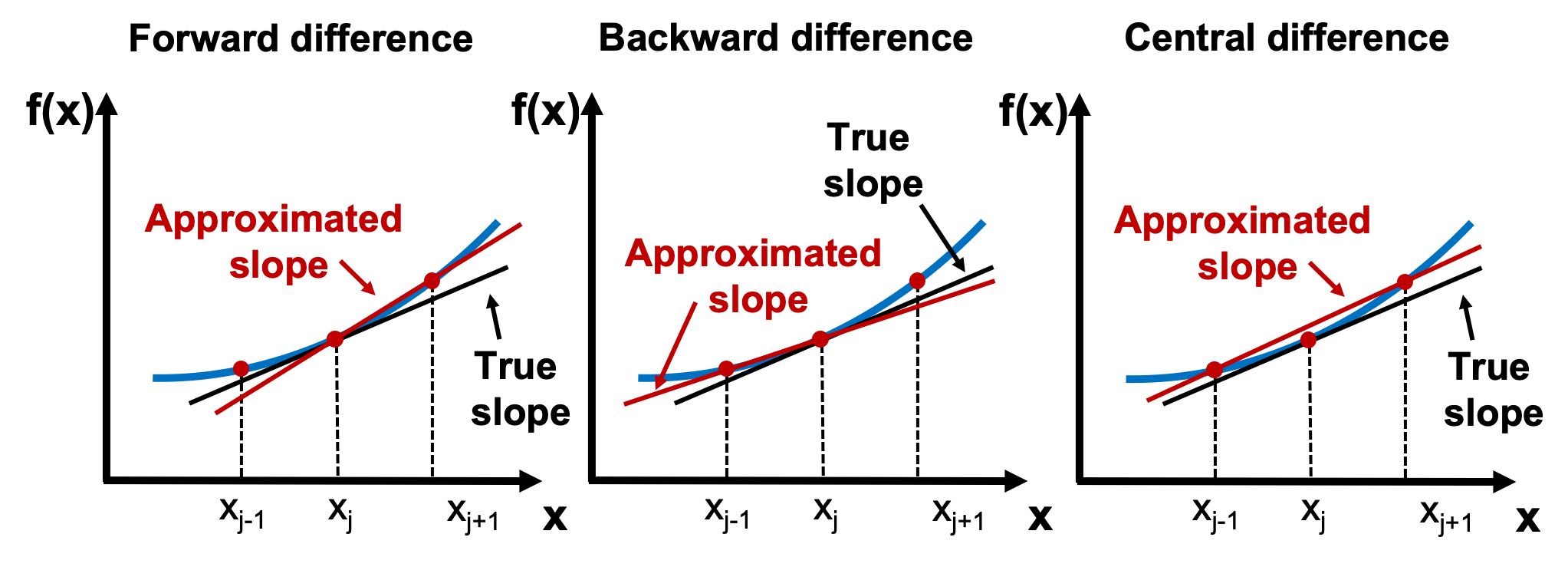

The derivative at \(x=a\) is the slope at this point. In finite difference approximations of this slope, we can use values of the function in the neighborhood of the point \(x=a\) to achieve the goal. There are various finite difference formulas used in different applications, and three of these, where the derivative is calculated using the values of two points, are presented below.

The forward difference is to estimate the slope of the function at \(x_j\) using the line that connects \((x_j, f(x_j))\) and \((x_{j+1}, f(x_{j+1}))\):

The backward difference is to estimate the slope of the function at \(x_j\) using the line that connects \((x_{j-1}, f(x_{j-1}))\) and \((x_j, f(x_j))\):

The central difference is to estimate the slope of the function at \(x_j\) using the line that connects \((x_{j-1}, f(x_{j-1}))\) and \((x_{j+1}, f(x_{j+1}))\):

The following figure illustrates the three different type of formulas to estimate the slope.

Finite Difference Approximating Derivatives with Taylor Series¶

To derive an approximation for the derivative of \(f\), we return to Taylor series. For an arbitrary function \(f(x)\) the Taylor series of \(f\) around \(a = x_j\) is $\( f(x) = \frac{f(x_j)(x - x_j)^0}{0!} + \frac{f^{\prime}(x_j)(x - x_j)^1}{1!} + \frac{f''(x_j)(x - x_j)^2}{2!} + \frac{f'''(x_j)(x - x_j)^3}{3!} + \cdots. \)$

If \(x\) is on a grid of points with spacing \(h\), we can compute the Taylor series at \(x = x_{j+1}\) to get

Substituting \(h = x_{j+1} - x_j\) and solving for \(f^{\prime}(x_j)\) gives the equation

The terms that are in parentheses, \(-\frac{f''(x_j)h}{2!} -\frac{f'''(x_j)h^2}{3!} - \cdots\), are called higher order terms of \(h\). The higher order terms can be rewritten as

where \(\alpha\) is some constant, and \(\epsilon(h)\) is a function of \(h\) that goes to zero as \(h\) goes to 0. You can verify with some algebra that this is true. We use the abbreviation “\(O(h)\)” for \(h(\alpha + \epsilon(h))\), and in general, we use the abbreviation “\(O(h^p)\)” to denote \(h^p(\alpha + \epsilon(h))\).

Substituting \(O(h)\) into the previous equations gives

This gives the forward difference formula for approximating derivatives as

and we say this formula is \(O(h)\).

Here, \(O(h)\) describes the accuracy of the forward difference formula for approximating derivatives. For an approximation that is \(O(h^p)\), we say that \(p\) is the order of the accuracy of the approximation. With few exceptions, higher order accuracy is better than lower order. To illustrate this point, assume \(q < p\). Then as the spacing, \(h > 0\), goes to 0, \(h^p\) goes to 0 faster than \(h^q\). Therefore as \(h\) goes to 0, an approximation of a value that is \(O(h^p)\) gets closer to the true value faster than one that is \(O(h^q)\).

By computing the Taylor series around \(a = x_j\) at \(x = x_{j-1}\) and again solving for \(f^{\prime}(x_j)\), we get the backward difference formula

which is also \(O(h)\). You should try to verify this result on your own.

Intuitively, the forward and backward difference formulas for the derivative at \(x_j\) are just the slopes between the point at \(x_j\) and the points \(x_{j+1}\) and \(x_{j-1}\), respectively.

We can construct an improved approximation of the derivative by clever manipulation of Taylor series terms taken at different points. To illustrate, we can compute the Taylor series around \(a = x_j\) at both \(x_{j+1}\) and \(x_{j-1}\). Written out, these equations are

and

Subtracting the formulas above gives

which when solved for \(f^{\prime}(x_j)\) gives the central difference formula

Because of how we subtracted the two equations, the \(h\) terms canceled out; therefore, the central difference formula is \(O(h^2)\), even though it requires the same amount of computational effort as the forward and backward difference formulas! Thus the central difference formula gets an extra order of accuracy for free. In general, formulas that utilize symmetric points around \(x_j\), for example \(x_{j-1}\) and \(x_{j+1}\), have better accuracy than asymmetric ones, such as the forward and background difference formulas.

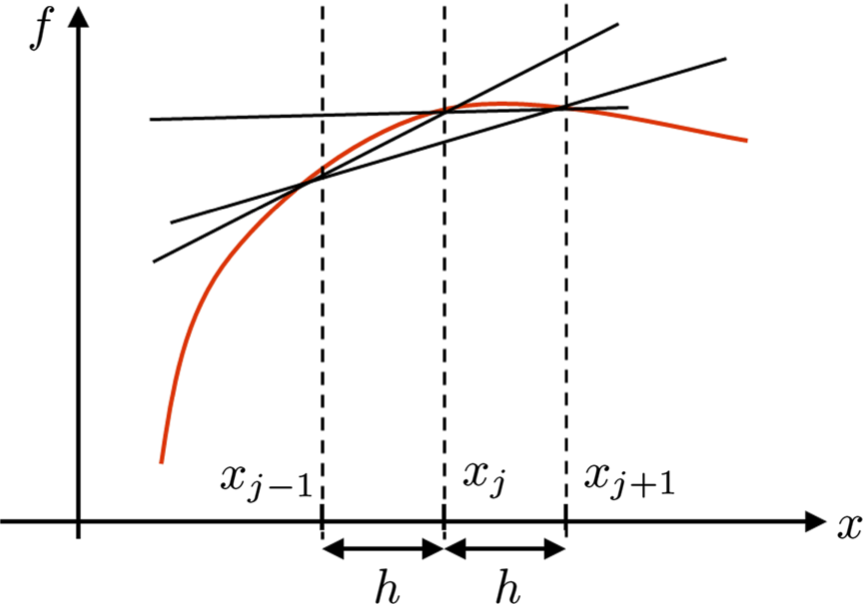

The following figure shows the forward difference (line joining \((x_j, y_j)\) and \((x_{j+1}, y_{j+1})\)), backward difference (line joining \((x_j, y_j)\) and \((x_{j-1}, y_{j-1})\)), and central difference (line joining \((x_{j-1}, y_{j-1})\) and \((x_{j+1}, y_{j+1})\)) approximation of the derivative of a function \(f\). As can be seen, the difference in the value of the slope can be significantly different based on the size of the step \(h\) and the nature of the function.

TRY IT! Take the Taylor series of \(f\) around \(a = x_j\) and compute the series at \(x = x_{j-2}, x_{j-1}, x_{j+1}, x_{j+2}\). Show that the resulting equations can be combined to form an approximation for \(f^{\prime}(x_j)\) that is \(O(h^4)\).

First, compute the Taylor series at the specified points.

To get the \(h^2, h^3\), and \(h^4\) terms to cancel out, we can compute

which can be rearranged to

This formula is a better approximation for the derivative at \(x_j\) than the central difference formula, but requires twice as many calculations.

TIP! Python has a command that can be used to compute finite differences directly: for a vector \(f\), the command \(d=np.diff(f)\) produces an array \(d\) in which the entries are the differences of the adjacent elements in the initial array \(f\). In other words \(d(i) = f(i+1) - f(i)\).

WARNING! When using the command np.diff, the size of the output is one less than the size of the input since it needs two arguments to produce a difference.

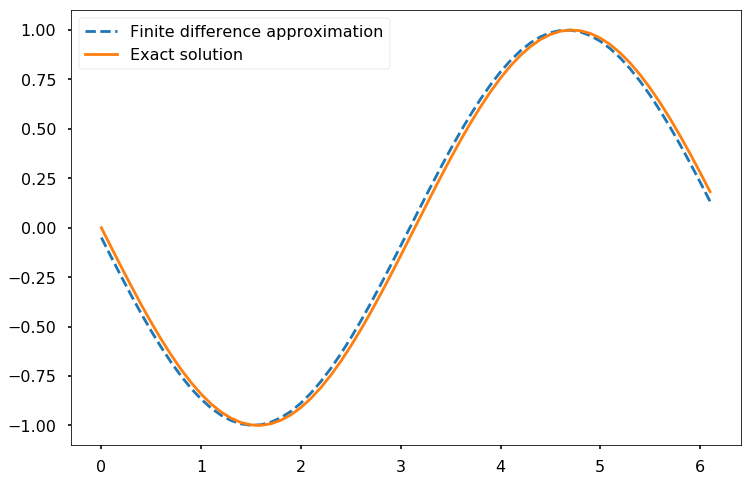

EXAMPLE: Consider the function \(f(x) = \cos(x)\). We know the derivative of \(\cos(x)\) is \(-\sin(x)\). Although in practice we may not know the underlying function we are finding the derivative for, we use the simple example to illustrate the aforementioned numerical differentiation methods and their accuracy. The following code computes the derivatives numerically.

import numpy as np

import matplotlib.pyplot as plt

plt.style.use('seaborn-poster')

%matplotlib inline

# step size

h = 0.1

# define grid

x = np.arange(0, 2*np.pi, h)

# compute function

y = np.cos(x)

# compute vector of forward differences

forward_diff = np.diff(y)/h

# compute corresponding grid

x_diff = x[:-1:]

# compute exact solution

exact_solution = -np.sin(x_diff)

# Plot solution

plt.figure(figsize = (12, 8))

plt.plot(x_diff, forward_diff, '--', \

label = 'Finite difference approximation')

plt.plot(x_diff, exact_solution, \

label = 'Exact solution')

plt.legend()

plt.show()

# Compute max error between

# numerical derivative and exact solution

max_error = max(abs(exact_solution - forward_diff))

print(max_error)

0.049984407218554114

As the above figure shows, there is a small offset between the two curves, which results from the numerical error in the evaluation of the numerical derivatives. The maximal error between the two numerical results is of the order 0.05 and expected to decrease with the size of the step.

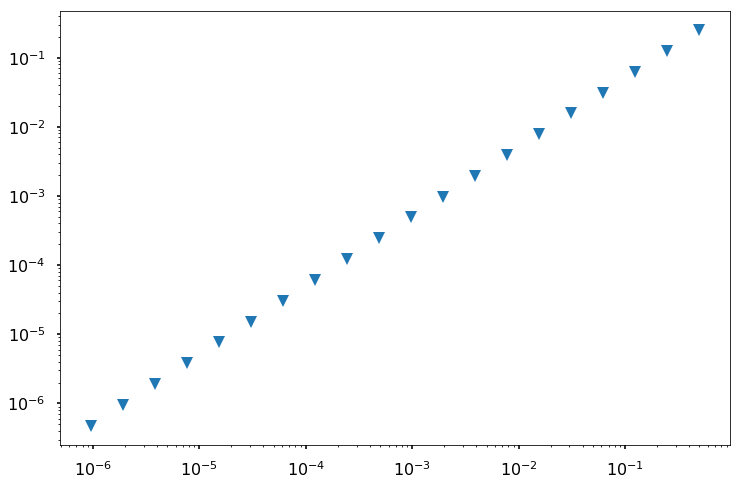

As illustrated in the previous example, the finite difference scheme contains a numerical error due to the approximation of the derivative. This difference decreases with the size of the discretization step, which is illustrated in the following example.

EXAMPLE: The following code computes the numerical derivative of \(f(x) = \cos(x)\) using the forward difference formula for decreasing step sizes, \(h\). It then plots the maximum error between the approximated derivative and the true derivative versus \(h\) as shown in the generated figure.

# define step size

h = 1

# define number of iterations to perform

iterations = 20

# list to store our step sizes

step_size = []

# list to store max error for each step size

max_error = []

for i in range(iterations):

# halve the step size

h /= 2

# store this step size

step_size.append(h)

# compute new grid

x = np.arange(0, 2 * np.pi, h)

# compute function value at grid

y = np.cos(x)

# compute vector of forward differences

forward_diff = np.diff(y)/h

# compute corresponding grid

x_diff = x[:-1]

# compute exact solution

exact_solution = -np.sin(x_diff)

# Compute max error between

# numerical derivative and exact solution

max_error.append(\

max(abs(exact_solution - forward_diff)))

# produce log-log plot of max error versus step size

plt.figure(figsize = (12, 8))

plt.loglog(step_size, max_error, 'v')

plt.show()

The slope of the line in log-log space is 1; therefore, the error is proportional to \(h^1\), which means that, as expected, the forward difference formula is \(O(h)\).

< 20.1 Numerical Differentiation Problem Statement | Contents | 20.3 Approximating of Higher Order Derivatives >